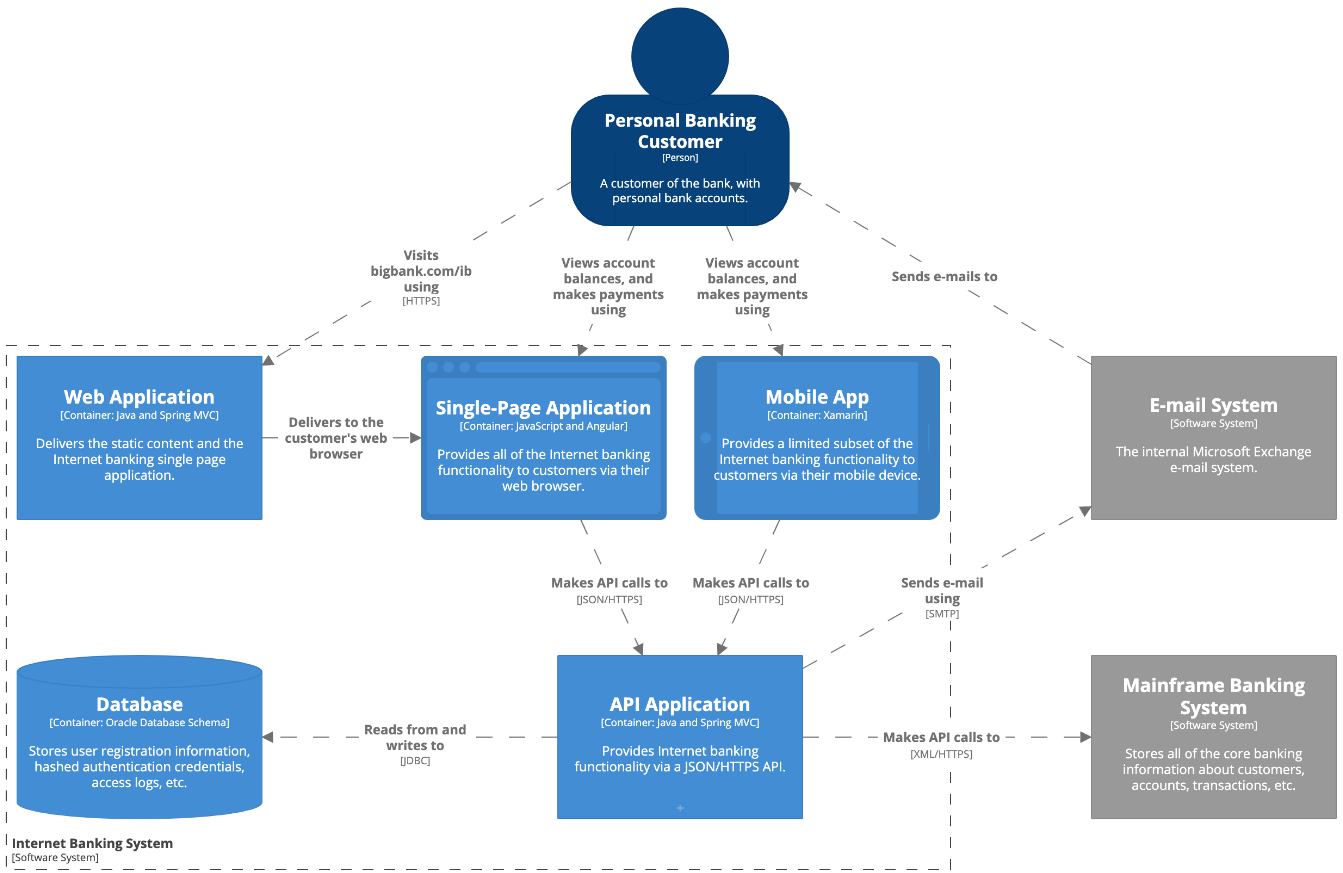

J’ai découvert il y a quelques temps le C4 model. C’est une méthodologie permettant de modéliser et documenter l’architecture logicielle d’un système logiciel. Cela m’a tout de suite intrigué et pourra intéresser ceux qui connaissent un peu l’état de l’art sur ce sujet.

Transmodel is the basis for defining exchange standards that enable the sharing and provision of accurate and interoperable public transport information across organisation- and system-boundaries.

Bank Python implementations are effectively proprietary forks of the entire Python ecosystem which are in use at many (but not all) of the biggest investment banks. Bank Python differs considerably from the common, or garden-variety Python that most people know and love (or hate).

I've said so far that a lot of data is stored in Barbara. Time to drop a bit of a bombshell: the source code is in Barbara too, not on disk. Remain composed. It's kept in a special Barbara ring called sourcecode.

it's possible to sit down, write a script and get it running in prod within the hour, which is a big deal.

Using simple Python functions, in a source controlled system, is a better middle ground than the modern-day equivalent of J2EE.

One thing I regret about software as a field is how little time is spent learning from existing systems and judging what they did well, or badly. There are only a small number of books discussing, in detail, real systems that exist.

Let me tell you about the still-not-defunct real-time log processing pipeline we built at my now-defunct last job. It handled logs from a large number of embedded devices that our ISP operated [...] Eventually our team's log processing system evolved to become the primary monitoring and alerting infrastructure for our ISP.

you mostly get told that you shouldn't be using unstructured logs anyway, you should be using event streams.

That advice is not wrong, but it's incomplete.There's a file called /dev/kmsg which, if you write to it, produces messages into the kernel's buffer. Let's do that! For all our messages!

RAM is even more volatile than disk, and you have to reboot after a kernel panic. So the RAM is gone, right? Well, no. Sort of. Not exactly.

have the client to stream logs to the server. This is possible using HTTP POST and Chunked encoding,

The log uploader uses a backoff timer so that if it's been trying to upload for a while, it uploads less often. However, the backoff timer was limited to no more than the usual inter-upload interval.

Someone probably told you that log messages are too slow, or too big, or too hard to read, or too hard to use, or you should use them while debugging and then delete them. All those people were living in the past and they didn't have a fancy log pipeline. Computers are really, really fast now. Storage is really, really cheap.

How much are you paying for someone to run some bloaty full-text indexer on all your logs, to save a few milliseconds per grep?